In an era defined by instantaneous insights and ultra‑low latencies, edge computing has emerged as a transformative force reshaping how enterprises collect, process, and act upon data. By relocating compute power closer to where data is generated—whether in factories, retail outlets, or autonomous vehicles—edge architectures empower organizations to deliver richer customer experiences, optimize operations, and unlock new revenue streams. This deep‑dive article explores the foundational concepts of edge computing, its technical building blocks, key benefits, real‑world applications, implementation challenges, and future trajectories.

Edge computing represents a paradigm shift away from centralized cloud‑only models toward a distributed fabric of compute nodes located at or near data sources. Unlike traditional cloud architectures—where raw data must travel over the internet to remote data centers for processing—edge systems handle computation on local devices such as gateways, micro data centers, or even embedded modules within sensors.

Latency Reduction

Data no longer traverses long-haul networks; insights are generated in milliseconds, enabling use cases like real‑time anomaly detection and safety‑critical control.

Bandwidth Optimization

By filtering, aggregating, and preprocessing data on the edge, organizations dramatically curtail the volume of traffic sent to central clouds, reducing operational expenses.

Resilience and Availability

Local compute continues functioning even if the connection to the central cloud is intermittent, ensuring mission‑critical applications remain online.
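To make the bandwidth point concrete, here is a minimal Python sketch of edge-side aggregation, assuming a sensor that is sampled in fixed windows: ten raw readings go in and one summary record comes out. The names `summarize_window` and `WindowSummary`, the window size, and the 15 to 30 °C limits are hypothetical choices for illustration, not part of any particular platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass(frozen=True)
class WindowSummary:
    """Compact record sent upstream in place of the raw samples."""
    count: int
    minimum: float
    maximum: float
    average: float
    out_of_range: int  # samples outside the [low, high] limits


def summarize_window(samples: Sequence[float], low: float, high: float) -> WindowSummary:
    """Collapse one window of raw sensor samples into a single summary record."""
    if not samples:
        raise ValueError("window must contain at least one sample")
    return WindowSummary(
        count=len(samples),
        minimum=min(samples),
        maximum=max(samples),
        average=mean(samples),
        out_of_range=sum(1 for s in samples if s < low or s > high),
    )


if __name__ == "__main__":
    # Hypothetical: one second of temperature readings sampled at 10 Hz.
    window = [21.0, 21.2, 21.1, 21.3, 35.0, 21.2, 21.1, 21.0, 21.2, 21.4]
    print(summarize_window(window, low=15.0, high=30.0))
```

Only the summary (and, if desired, the few out-of-range samples themselves) would be forwarded to the cloud; the raw stream stays local.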

Core Components of an Edge Architecture

A robust edge computing deployment comprises several interlocking layers:

Edge Devices (Sensors & Actuators): These generate raw data, such as temperature readings, video streams, and machine vibration metrics, and those fitted with embedded AI accelerators can execute lightweight inference.

Edge Gateways and Micro Data Centers: Acting as aggregation points, these nodes provide enhanced compute, storage, and networking, coordinating multiple devices and enforcing security policies.

Network Fabric: High‑speed, low‑latency links—often leveraging 5G or private fiber—carry data between local sites and central clouds.

Orchestration & Management Plane: Software platforms handle deployment, monitoring, scaling, and updates across hundreds or thousands of edge nodes, ensuring consistency and compliance.

Integration with Cloud & AI Services: Seamless data flow into centralized analytics platforms and AI/ML pipelines enables advanced modeling, long‑term trend analysis, and cross‑site comparisons.

Key Benefits of Edge Computing

Edge computing offers a spectrum of advantages that directly impact business performance and user satisfaction:

A. Ultra‑Low Latency Decisioning

Applications such as augmented reality, robotics, and autonomous driving need immediate responses, which can require end‑to‑end processing in under 10 ms.
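A rough worked calculation shows why a distant data center struggles to meet such a budget. Assuming light travels through optical fiber at about two-thirds of its vacuum speed (refractive index n ≈ 1.5) and a straight 1,000 km fiber path to the data center, propagation alone costs:

```latex
v_{\text{fiber}} \approx \frac{c}{n} \approx \frac{3 \times 10^{5}\ \text{km/s}}{1.5} = 2 \times 10^{5}\ \text{km/s}

t_{\text{RTT}} = \frac{2d}{v_{\text{fiber}}} = \frac{2 \times 1000\ \text{km}}{2 \times 10^{5}\ \text{km/s}} = 10^{-2}\ \text{s} = 10\ \text{ms}
```

That is the entire 10 ms budget spent before any queuing, routing, or computation; real fiber routes are usually longer than the straight-line distance, so the true figure is higher.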

B. Cost‑Effective Bandwidth Usage

Local preprocessing curtails unnecessary data transmission, slashing monthly connectivity fees and storage overhead.

C. Enhanced Data Privacy & Security

Sensitive information—like patient vitals or proprietary manufacturing metrics—can be anonymized or encrypted at the edge before transit.
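One common way to protect identifiers before transit is keyed hashing. The Python sketch below replaces a patient identifier with an HMAC-SHA256 token computed on the edge node, so cloud analytics can still group readings by subject without ever receiving the raw ID. The field names, the `pseudonymize` helper, and the hard-coded key are hypothetical; in a real deployment the key would live in protected storage. Note that this is pseudonymization rather than full anonymization: the remaining fields may still be identifying.

```python
import hashlib
import hmac
from typing import Any, Dict


def pseudonymize(record: Dict[str, Any], key: bytes, id_field: str = "patient_id") -> Dict[str, Any]:
    """Return a copy of record with its identifier replaced by a keyed hash.

    The same ID and key always yield the same token, so upstream analytics can
    still correlate readings, but the raw ID never leaves the edge node.
    Raises KeyError if the record has no id_field.
    """
    out = dict(record)
    raw_id = str(out.pop(id_field)).encode("utf-8")
    out["subject_token"] = hmac.new(key, raw_id, hashlib.sha256).hexdigest()
    return out


if __name__ == "__main__":
    # Hypothetical key; in practice load it from a secure element or secrets vault.
    site_key = b"hypothetical-per-site-secret"
    reading = {"patient_id": "MRN-004211", "heart_rate": 72, "spo2": 98}
    print(pseudonymize(reading, site_key))
```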

D. Scalable Distributed Architecture

Organizations can incrementally add new edge sites without overhauling existing cloud infrastructure.

E. Regulatory Compliance

Data sovereignty laws often mandate that certain data remain within specific geographic boundaries; by localizing storage and compute, edge nodes help organizations meet these requirements.
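A data-residency rule can be enforced at the edge with a simple fail-closed routing check. The Python sketch below assumes each record is tagged with its region of origin and that a hypothetical mapping from region to in-region storage endpoint is available; if no in-region destination exists, the record is not sent anywhere. The region codes and store names are illustrative only.

```python
from typing import Dict


class ResidencyError(Exception):
    """Raised when no in-region destination exists for a record."""


def choose_store(record_region: str, stores: Dict[str, str]) -> str:
    """Pick a storage endpoint located in the record's region of origin.

    Fails closed: if no store exists in that region, the caller must keep the
    record on the edge node rather than fall back to another region.
    """
    try:
        return stores[record_region]
    except KeyError:
        raise ResidencyError(f"no in-region store for {record_region!r}") from None


if __name__ == "__main__":
    # Hypothetical region codes and endpoint names.
    stores = {"eu-de": "edge-store-frankfurt", "us-tx": "edge-store-dallas"}
    print(choose_store("eu-de", stores))
```

Raising rather than defaulting to some other region is the conservative choice here, since a silent cross-border fallback is exactly what residency rules forbid.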

Real‑World Use Cases

1. Industrial Internet of Things (IIoT)

In modern factories, thousands of sensors monitor equipment health, product quality, and environmental conditions. Edge computing platforms analyze vibration patterns and temperature fluctuations in real time, predicting machine failures before they occur. By running predictive maintenance locally, manufacturers minimize downtime and improve production yields.
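The article does not prescribe a specific model, but a rolling z-score is one of the simplest ways to flag vibration readings that depart from a machine's recent baseline. The Python sketch below is that simple stand-in, not a production predictive-maintenance algorithm (which would typically use spectral features or trained models); the class name, window length, threshold of 3 standard deviations, and sample readings are illustrative assumptions.

```python
from collections import deque
from math import sqrt
from typing import Deque, Optional


class RollingZScore:
    """Flag samples that deviate sharply from a machine's recent baseline.

    Keeps the last `window` samples; each new sample is scored against their
    mean and sample standard deviation before being added to the window.
    """

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        if window < 2:
            raise ValueError("window must hold at least two samples")
        self.buf: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, x: float) -> Optional[float]:
        """Return the z-score of x, or None while the baseline window is filling."""
        score: Optional[float] = None
        if len(self.buf) == self.buf.maxlen:
            n = len(self.buf)
            mu = sum(self.buf) / n
            sd = sqrt(sum((v - mu) ** 2 for v in self.buf) / (n - 1))
            if sd > 0:
                score = abs(x - mu) / sd
            else:
                # Perfectly flat baseline: any change at all is maximally surprising.
                score = 0.0 if x == mu else float("inf")
        self.buf.append(x)
        return score

    def is_anomaly(self, score: Optional[float]) -> bool:
        return score is not None and score > self.threshold


if __name__ == "__main__":
    detector = RollingZScore(window=5, threshold=3.0)
    # Hypothetical RMS vibration readings (mm/s); the last one is a spike.
    for reading in [1.0, 1.1, 0.9, 1.0, 1.05, 1.02, 5.0]:
        z = detector.update(reading)
        print(reading, z, detector.is_anomaly(z))
```

In this sketch an anomalous sample is still added to the window, so a sustained shift gradually becomes the new baseline; a stricter design might exclude flagged samples.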

2. Autonomous Vehicles

Self‑driving cars and drones must interpret complex sensor inputs—LiDAR, RADAR, cameras—on the fly. The edge‑native AI modules within the vehicle fuse these data streams and make split‑second decisions for obstacle avoidance, path planning, and adaptive cruise control, all without relying on distant data centers.
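Full sensor-fusion stacks typically use Kalman filters or learned models, but the core idea can be illustrated with inverse-variance weighting, which combines independent noisy estimates of the same quantity so that the more precise sensor counts for more. The Python sketch assumes independent, unbiased errors with known variances; the function name and the sample range readings are hypothetical.

```python
from typing import Iterable, Tuple


def fuse_estimates(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1/variance, so the more precise sensor
    dominates. Returns the fused value and its variance, which is never
    larger than the smallest input variance.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for value, variance in estimates:
        if variance <= 0:
            raise ValueError("variance must be positive")
        w = 1.0 / variance
        total_weight += w
        weighted_sum += w * value
    if total_weight == 0.0:
        raise ValueError("at least one estimate is required")
    return weighted_sum / total_weight, 1.0 / total_weight


if __name__ == "__main__":
    # Hypothetical range-to-obstacle readings in metres: (value, variance).
    lidar = (24.90, 0.01)  # precise
    radar = (25.40, 0.25)  # noisier
    print(fuse_estimates([lidar, radar]))
```

With these numbers the fused range lands close to the LiDAR reading (about 24.92 m), and its variance (1/104 ≈ 0.0096 m²) is smaller than either input's.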

3. Smart Retail & Digital Signage

Retail outlets employ edge‑powered cameras and analytics to understand shopper behavior, optimize store layouts, and deliver personalized promotions. Real‑time footfall analysis triggers dynamic pricing or targeted advertising on digital displays, boosting conversion rates and customer engagement.

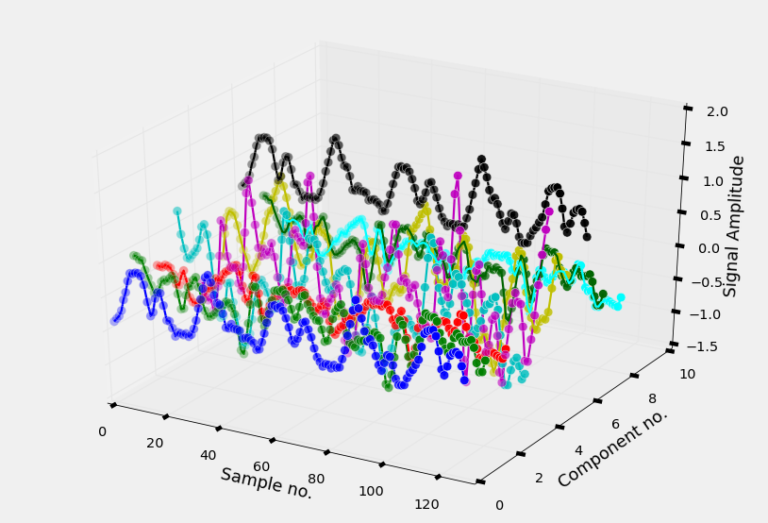

4. Healthcare & Remote Monitoring

Wearable devices and bedside monitors generate continuous streams of physiological data such as heart rate, blood oxygen, and EKG signals. Edge gateways within hospitals preprocess these signals to detect arrhythmias or signs of distress and alert clinicians immediately. At‑home patient monitors, likewise, analyze readings locally and transmit only critical events, preserving bandwidth and patient privacy.
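The "transmit only critical events" pattern can be sketched as a local filter that forwards a reading only when a limit has been breached for several consecutive samples, which suppresses one-off artifacts such as a momentarily loose sensor. The thresholds in this Python sketch are placeholders chosen for illustration and are not clinical guidance; the `Vitals` record and function names are likewise hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Vitals:
    timestamp: float  # seconds since the start of monitoring
    heart_rate: int   # beats per minute
    spo2: int         # blood-oxygen saturation, percent


# Placeholder limits for illustration only; NOT clinical guidance.
HR_LOW, HR_HIGH = 40, 130
SPO2_LOW = 90


def breaches_limits(v: Vitals) -> bool:
    """True if any vital sign is outside its configured limit."""
    return v.heart_rate < HR_LOW or v.heart_rate > HR_HIGH or v.spo2 < SPO2_LOW


def critical_events(stream: Iterable[Vitals], persist: int = 3) -> Iterator[Vitals]:
    """Yield a reading only once limits have been breached `persist` times in a row.

    Everything else stays on the device. Each episode is reported once, at the
    sample that confirms it; the counter resets when readings return to normal.
    """
    run = 0
    for v in stream:
        if breaches_limits(v):
            run += 1
            if run == persist:
                yield v
        else:
            run = 0
```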

5. Energy & Utilities

Smart grids leverage edge nodes at substations to balance load, detect power quality issues, and respond instantly to grid disturbances. By processing SCADA data on site, utilities enhance reliability and optimize energy distribution without waiting for central commands.

Implementation Considerations

While the promise of edge computing is compelling, enterprises must navigate several challenges to realize its full potential:

A. Hardware Selection

Edge environments span from ruggedized industrial controllers to compact AI accelerators. Choosing devices that balance compute power, energy consumption, and cost is critical.

B. Software & Orchestration

Deploying and updating containerized applications across hundreds of edge sites requires robust orchestration platforms—often built on Kubernetes distributions optimized for edge.
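Orchestration platforms handle the actual delivery of containers, but the rollout plan itself often follows a staged or "canary" pattern: update one site, then progressively larger waves. The Python sketch below only computes such waves; the function name, the site IDs, and the doubling growth factor are assumptions, and a real pipeline would gate each wave on health checks before proceeding.

```python
from typing import List, Sequence


def rollout_waves(sites: Sequence[str], canary: int = 1, growth: int = 2) -> List[List[str]]:
    """Split sites into progressively larger deployment waves.

    The first wave holds `canary` sites; each later wave is `growth` times the
    size of the previous one, so a bad update surfaces while it affects only
    a small fraction of the fleet. The last wave takes whatever remains.
    """
    if canary < 1 or growth < 1:
        raise ValueError("canary and growth must be >= 1")
    waves: List[List[str]] = []
    size, start = canary, 0
    while start < len(sites):
        waves.append(list(sites[start:start + size]))
        start += size
        size *= growth
    return waves


if __name__ == "__main__":
    # Hypothetical site IDs.
    for i, wave in enumerate(rollout_waves([f"site-{n:02d}" for n in range(10)]), start=1):
        print(f"wave {i}: {wave}")
```

For ten sites with the defaults, this yields waves of 1, 2, 4, and 3 sites.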

C. Security & Trust

Edge nodes, often located in unsecured or remote sites, must be hardened against tampering. Secure boot, hardware root of trust, and encrypted communications are non‑negotiable.
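Secure boot and signed updates rest on asymmetric signatures anchored in a hardware root of trust, which the Python standard library cannot demonstrate on its own. The simplified sketch below shows only the last step: checking that a downloaded update matches the SHA-256 digest listed in a manifest (assumed to have been signature-verified already) before it is staged. The function names and return values are hypothetical.

```python
import hashlib
import hmac


def verify_payload(payload: bytes, expected_sha256: str) -> bool:
    """Return True only if payload hashes to the digest published in the manifest.

    compare_digest avoids leaking, via timing, how many leading characters matched.
    """
    actual = hashlib.sha256(payload).hexdigest()
    return hmac.compare_digest(actual, expected_sha256.strip().lower())


def install_if_valid(payload: bytes, expected_sha256: str) -> str:
    """Stage the update only if it is intact; otherwise keep the current version."""
    if not verify_payload(payload, expected_sha256):
        return "rejected"
    # Hypothetical: hand off to the device's real update mechanism here.
    return "staged"
```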

D. Data Management

Defining which data to process locally and which to send to the cloud, establishing retention policies, and ensuring data integrity across intermittent connections demand clear governance frameworks.

E. Connectivity Variability

Network dropouts or bandwidth fluctuations can hinder updates and data synchronization. Solutions include offline‑first architectures and store‑and‑forward mechanisms.
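A store-and-forward mechanism can be as simple as a bounded local queue that is drained, oldest message first, whenever the uplink accepts data. In this Python sketch the `send` callable is a hypothetical stand-in for whatever transport the node uses (it returns True on confirmed delivery), and the drop-oldest overflow policy is an assumption; some deployments would spill to disk instead.

```python
from collections import deque
from typing import Any, Callable, Deque


class StoreAndForward:
    """Buffer messages locally while the uplink is down; flush in order on reconnect.

    The buffer is bounded: when full, the oldest message is evicted so the node
    never exhausts memory during a long outage.
    """

    def __init__(self, send: Callable[[Any], bool], capacity: int = 10_000) -> None:
        self._send = send  # returns True on confirmed delivery
        self._queue: Deque[Any] = deque(maxlen=capacity)
        self.dropped = 0

    def publish(self, msg: Any) -> None:
        if len(self._queue) == self._queue.maxlen:
            self.dropped += 1  # the append below evicts the oldest entry
        self._queue.append(msg)
        self.flush()

    def flush(self) -> int:
        """Send queued messages oldest-first; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # link still down; keep the message for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)
```

Because a message is removed only after `send` reports success, a lost acknowledgment can cause a resend, so receivers should tolerate duplicates (at-least-once delivery).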

Designing an Effective Edge Strategy

Enterprises embarking on an edge transformation should follow a structured approach:

Assess Workloads and Latency Requirements

Map out applications that demand real‑time processing versus those suitable for centralized analytics. Prioritize workloads where latency has the greatest business impact.

Develop a Hybrid Architecture Blueprint

Clearly delineate responsibilities between edge nodes and cloud services. Define data pipelines, APIs, and integration points.

Pilot with Representative Sites

Launch a small‑scale proof of concept in controlled environments, such as a single factory line or test vehicle fleet, to validate performance, reliability, and security.

Leverage Edge‑Optimized Tools

Utilize edge‑optimized orchestration and runtime platforms (e.g., the lightweight Kubernetes distribution K3s, Azure IoT Edge, AWS IoT Greengrass) designed for constrained or intermittent networks.

Establish Monitoring & Analytics

Implement centralized dashboards to track health, performance, and security events across all edge locations, ensuring rapid remediation.

Iterate and Scale

Refine configurations, deploy software patches, and expand to additional sites based on insights from the pilot phase.
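The monitoring step above usually starts with heartbeats: each node reports periodically, and the central dashboard classifies nodes by how long they have been silent. The Python sketch below assumes a fixed reporting interval; the 30-second interval, the three-missed-beats cutoff, and the node names are hypothetical.

```python
from enum import Enum
from typing import Dict


class Health(Enum):
    HEALTHY = "healthy"
    DEGRADED = "degraded"  # late, but possibly just a slow or congested link
    OFFLINE = "offline"    # silent long enough to warrant an alert


def classify_fleet(last_seen: Dict[str, float], now: float,
                   interval_s: float = 30.0, offline_after: int = 3) -> Dict[str, Health]:
    """Map each node ID to a health state based on the age of its last heartbeat.

    last_seen maps node ID -> Unix timestamp of its most recent heartbeat.
    A node is DEGRADED once it is more than one interval late and OFFLINE
    after offline_after intervals of silence.
    """
    result: Dict[str, Health] = {}
    for node, ts in last_seen.items():
        missed = (now - ts) / interval_s
        if missed >= offline_after:
            result[node] = Health.OFFLINE
        elif missed > 1:
            result[node] = Health.DEGRADED
        else:
            result[node] = Health.HEALTHY
    return result
```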

Future Trends and Innovations

The edge computing landscape continues to evolve rapidly:

Convergence with 5G and Private Networks

Telecom operators and enterprises are rolling out private 5G networks that deliver dedicated, ultra‑reliable, low‑latency connectivity tailored for edge deployments.

Built‑In AI at the Edge

Edge AI accelerators such as Google’s Edge TPU and NVIDIA’s Jetson series, together with their successors, are expected to enable more sophisticated on‑device inference, from natural language processing to generative AI.

Serverless Edge Architectures

Emerging frameworks aim to bring the benefits of serverless computing, such as automatic scaling and pay‑per‑use billing, to distributed edge nodes.

Digital Twins and Real‑Time Simulations

Digital twin replicas hosted at the edge could support near‑real‑time ‘what‑if’ analyses for complex systems, from smart cities to power plants.

Edge‑to‑Blockchain Integration

Secure, decentralized ledgers could record and verify edge transactions, a potential fit for supply chain traceability and federated data sharing.

Conclusion

Edge computing transcends traditional cloud‑centric models, delivering a new era of real‑time intelligence, operational resilience, and cost efficiency. By strategically deploying compute power at the network’s edge, organizations can harness instantaneous insights, optimize bandwidth usage, and ensure uninterrupted service—even in disconnected or regulated environments. From manufacturing floors to autonomous vehicles, edge architectures will underpin the next generation of digital transformation, empowering enterprises to innovate faster and stay ahead in an increasingly data‑driven world.